Those who manage RFPs already know the truth that most teams learn the hard way: great decisions come from great scoring. Clear criteria, clean scorecards, and a simple way to compare vendors will save your team hours, reduce internal debate, and help you select partners with confidence.

This guide covers simple and weighted RFP scoring, with step-by-step instructions for setting up each method, plus examples, best practices, and templates you can copy. You’ll also find tips for reading results and aligning stakeholders, a side-by-side comparison of simple scoring versus weighted scoring, and guidance on how to implement either (or both) approaches within your organization.

Ready to make scoring feel less like a spreadsheet slog and more like a reliable, repeatable system your team trusts? Let’s get started.

RFP scoring basics

Before we dive into templates and tactics, let’s set shared definitions and expectations.

What is RFP scoring?

RFP scoring is the process of assigning numerical values to proposal responses, allowing you to compare vendors on a consistent scale. It replaces opinion with a structured, data-based approach and reduces the risk of bias. In strategic sourcing, where answers are often long and nuanced, scoring provides a quick view of strengths and weaknesses across multiple vendors.

Simple scoring and weighted scoring at a glance

There are two standard models for RFP scoring:

- Simple scoring: Every question or section carries equal value. Evaluators grade responses on a fixed scale, typically ranging from 1 to 5, using a shared rubric. It’s fast and easy. The downside? Low-importance questions can count just as much as critical ones.

- Weighted scoring: Questions or sections have different values. You apply percentages to reflect what matters most for the project, then score responses on a scale. This produces a clearer picture of “best fit” when trade-offs exist. It is more precise, but it requires a bit more setup work.
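To make the difference between the two models concrete, here is a minimal Python sketch. The vendor names, section names, scores, and weights are all hypothetical and chosen only to show how the two models can rank the same responses differently.

```python
# Hypothetical 1-to-5 scores for two vendors across three sections.
scores = {
    "Vendor A": {"functionality": 5, "security": 4, "company_info": 2},
    "Vendor B": {"functionality": 3, "security": 4, "company_info": 5},
}

# Hypothetical weights in percent for the weighted model; they total 100.
weights = {"functionality": 60, "security": 30, "company_info": 10}


def simple_score(section_scores):
    """Simple scoring: every section counts equally, so take the plain average."""
    return sum(section_scores.values()) / len(section_scores)


def weighted_score(section_scores, weights):
    """Weighted scoring: each score counts in proportion to its section weight."""
    total_weight = sum(weights.values())
    return sum(section_scores[name] * w for name, w in weights.items()) / total_weight


for vendor, section_scores in scores.items():
    print(
        vendor,
        "simple:", round(simple_score(section_scores), 2),
        "weighted:", round(weighted_score(section_scores, weights), 2),
    )
```

With these made-up numbers, Vendor B leads under simple scoring because of a strong answer in a low-importance section, while Vendor A leads once functionality carries most of the weight.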

When should I use each approach?

- Use simple scoring for lower-stakes, low-complexity buys, pilot projects, or when all requirements truly carry equal importance.

- Use weighted scoring for strategic purchases, multi-stakeholder decisions, and any scenario with must-have versus nice-to-have requirements.

RFP scoring examples

Seeing a model beats reading about one. Below is a straightforward five-point rubric, a look at how teams structure a vendor scorecard, and pointers to ready-to-use templates. Feel free to use what works, remix the rest, and move faster on your next evaluation.

A straightforward, simple-scoring scale:

Most teams use a five-point scale. Here’s a common rubric:

- 1: Non-compliant

- 2: Minimally compliant

- 3: Partially compliant

- 4: Mostly compliant

- 5: Fully compliant

Keep each level short, specific, and observable, and include examples of what “good” looks like so scorers stay aligned.

A sample scorecard structure:

Many vendor scorecards evaluate along familiar categories. A helpful, eight-criterion pattern includes:

- Adherence to RFP instructions

- Company information

- Project understanding

- Requirements

- Product viability and history

- Terms and conditions

- Vendor demonstration

- Fee summary

However much you tailor these categories to your specific needs, the key is to apply them consistently across vendors.

Templates you can use today:

Prefer to start from a proven format? These public templates show scoring structure in action:

- Vendor scorecard template from Smartsheet, based on eight criteria, with a completed example tab and a blank tab you can copy and use.

- A University of Wisconsin-Madison scorecard (Division of Business Services) with clear instructions for setting multipliers and scoring on a 0 to 5 scale.

Steps to ensure effective RFP scoring

Process makes or breaks scoring. A straightforward setup turns a pile of responses into a clean, defensible recommendation. The steps below take you from stakeholder alignment to a finished rubric, with guidance you can hand to scorers right away.

1) Set the stage during requirements discovery

Good scoring starts before you write a single question. Bring together the core stakeholders who will live with the outcome. Ask them to define success, identify must-have requirements, and agree on evaluation categories. Typical categories include background, functionality, pricing, implementation, technical needs, reputation, financial stability, and security. Your scorecard should mirror these buckets.

Practical tip: capture the “why” behind each requirement. This helps you write better questions and build a scoring rubric that aligns with intent, rather than relying on guesswork.

2) Define your approach, priorities, and scale

Decide early on whether simple or weighted scoring fits the purchase. If importance varies by category, use weighted scoring and assign each category a percentage weight so that the weights sum to 100. A common starting point might allocate 40 percent to functionality, 20 percent to reputation, and distribute the rest across risk-related topics like security and financial stability.

Ultimately, the exact breakdown may differ for your organization, but keep your scoring scale consistent (usually 1 to 5) across questions and sections.
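If you track weights in a script or export them from a spreadsheet, a small check like the sketch below can catch weights that do not sum to 100 or scores that fall outside the scale. The 40 and 20 percent figures come from the example above; the even 20/20 split of the remainder between security and financial stability is an assumption made only for illustration, as are the function names.

```python
# Category weights in percent. The 40 and 20 come from the example above;
# the 20/20 split of the remainder is an assumption for illustration.
weights = {
    "functionality": 40,
    "reputation": 20,
    "security": 20,
    "financial_stability": 20,
}

SCALE_MIN, SCALE_MAX = 1, 5  # one scale for every question and section


def check_weights(weights):
    """Raise ValueError if any weight is negative or the total is not 100."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("Weights cannot be negative")
    total = sum(weights.values())
    if total != 100:
        raise ValueError(f"Weights sum to {total}, expected 100")


def check_score(score):
    """Raise ValueError if a score falls outside the agreed scale."""
    if not SCALE_MIN <= score <= SCALE_MAX:
        raise ValueError(f"Score {score} is outside {SCALE_MIN} to {SCALE_MAX}")


check_weights(weights)  # passes silently when the weights are valid
check_score(4)          # passes silently when the score is on the scale
```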

3) Provide clear direction for evaluators

Create a scoring matrix or rubric with example answers that illustrate what earns a 1, a 3, or a 5. Assign sections to subject matter experts rather than making every evaluator score every answer. Clarify who resolves discrepancies and how you’ll handle significant variances.
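One way to decide which variances count as "significant" is to flag any question where evaluators' scores are far apart. The sketch below assumes a 1 to 5 scale and a hypothetical threshold of two points; the questions, evaluator names, and threshold are illustrative and should be replaced with whatever your team agrees on.

```python
# Hypothetical scores: question -> evaluator -> score on the 1-to-5 scale.
scores = {
    "Q1 data encryption": {"Ana": 4, "Ben": 5, "Chen": 4},
    "Q2 uptime guarantee": {"Ana": 1, "Ben": 4, "Chen": 3},
    "Q3 onboarding plan": {"Ana": 3, "Ben": 3, "Chen": 2},
}


def flag_discrepancies(scores, max_gap=2):
    """Return {question: gap} where highest minus lowest score is at least max_gap."""
    flagged = {}
    for question, by_evaluator in scores.items():
        gap = max(by_evaluator.values()) - min(by_evaluator.values())
        if gap >= max_gap:
            flagged[question] = gap
    return flagged


print(flag_discrepancies(scores))
```

With this sample data, only the uptime question is flagged, so that is the one to hand to whoever resolves discrepancies.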

Optional: Use software to streamline the process

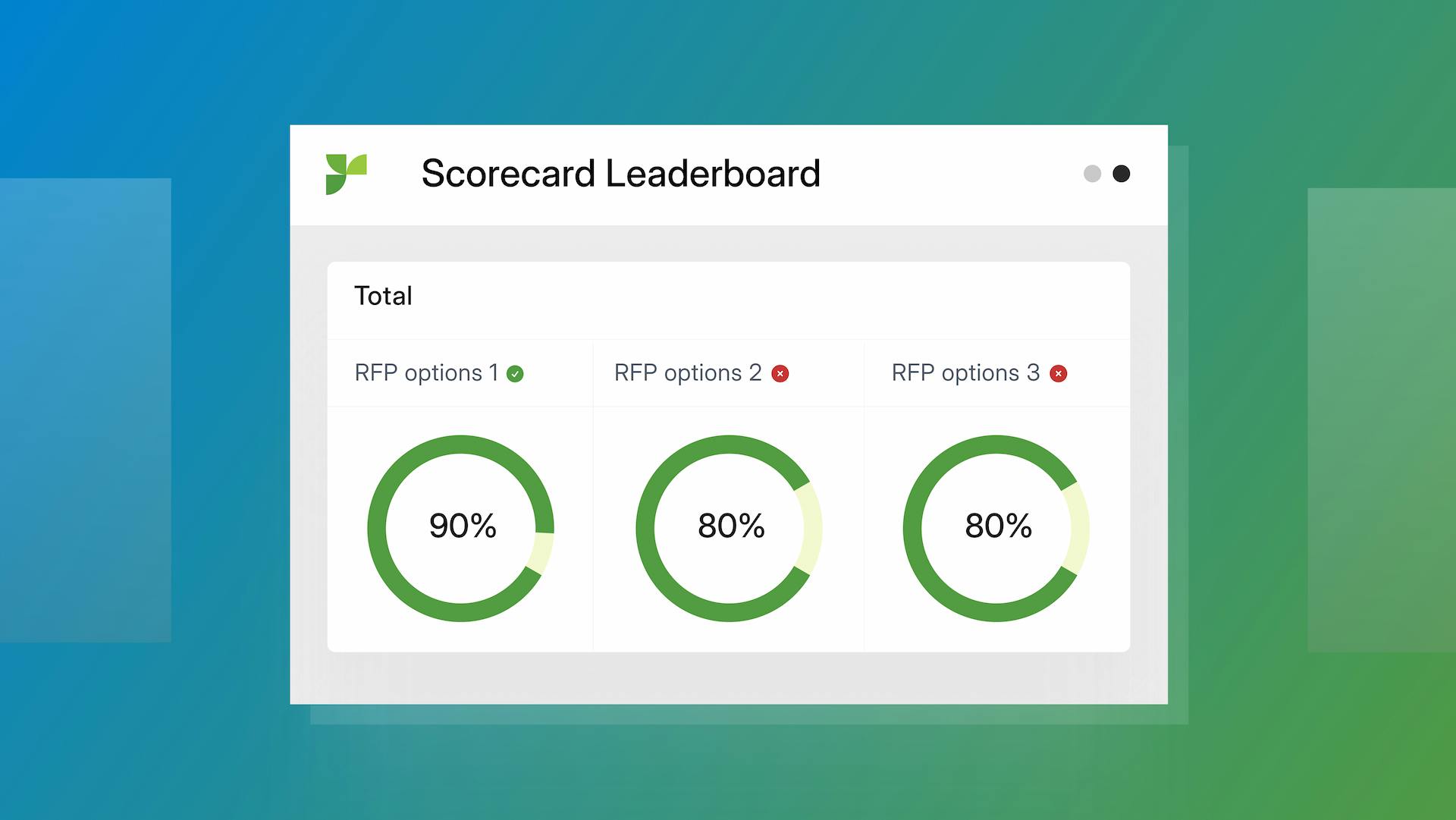

If you manage multiple stakeholders and multi-section RFPs, an RFP platform like Responsive can centralize scoring, apply weights automatically, and keep everyone on the same timeline. Responsive allows users to choose weighted or non-weighted scoring, assign sections to the right SMEs, and keep every evaluator on schedule with due dates and reminders.

Scoring best practices that reduce friction

You can avoid most scoring headaches with a short checklist. These best practices keep your process focused, fair, and fast. Use them as guardrails each time you plan an evaluation, especially when new stakeholders join the review.

- Write scoring criteria before you draft questions. You will write cleaner prompts and avoid collecting information you cannot use.

- Keep it brief. Shortlist a reasonable number of vendors and questions. Five vendors and twenty questions still yield one hundred answers per evaluator.

- Engage the right stakeholders. Invite diverse perspectives, but be intentional about who scores what. Align sections with expertise.

- Consider blind scoring. Remove vendor names and branding during the scoring phase to reduce bias, especially if an incumbent is involved.

- Publish your scoring approach in the RFP. Telling vendors what matters helps them focus on the most critical areas, which makes responses easier to compare.

- Leverage technology. Automate tabulation and weighting, then use dashboards to spot outliers, gaps, and trends.
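For teams running evaluations in spreadsheets, the blind scoring practice above can be approximated by replacing vendor names with neutral labels before responses go to scorers. This is a minimal sketch: the vendor names and the `assign_blind_labels` helper are hypothetical, and it only covers names, not logos or branding embedded in the responses themselves.

```python
import random


def assign_blind_labels(vendor_names, seed=None):
    """Map each real vendor name to a neutral label in shuffled order."""
    rng = random.Random(seed)  # pass a seed only if you need a repeatable mapping
    shuffled = list(vendor_names)
    rng.shuffle(shuffled)
    return {name: f"Vendor {i + 1}" for i, name in enumerate(shuffled)}


# Hypothetical vendor names. Keep the mapping with the RFP owner, not the scorers.
labels = assign_blind_labels(["Acme", "Globex", "Initech"])
print(sorted(labels.values()))
```

Shuffling before labeling keeps the label order from revealing anything, such as alphabetical order or which vendor is the incumbent.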

How to interpret the results

Scoring produces a number, not a mandate. Your final choice should reflect both the scores and the realities of the project. It’s essential to know how to read totals, spot patterns in section scores, and explain your recommendation with confidence.

Start with the totals, then drill down

Begin with the overall score to shortlist your top options. Then look at the section-level scores for the story behind the totals. A vendor may lead on functionality and support while trailing on price, or vice versa. Use the section view to guide follow-ups, reference checks, and demos.
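The "totals first, then sections" reading can be sketched in a few lines of Python. The vendors, sections, and scores here are hypothetical, and the totals use a plain average for simplicity; a weighted total would slot in the same way.

```python
# Hypothetical section-level averages on a 1-to-5 scale.
section_scores = {
    "Vendor A": {"functionality": 4.5, "support": 4.0, "price": 2.5},
    "Vendor B": {"functionality": 3.5, "support": 3.5, "price": 4.5},
    "Vendor C": {"functionality": 3.0, "support": 3.0, "price": 3.0},
}

# Step 1: overall totals, used only to build the shortlist.
totals = {
    vendor: sum(sections.values()) / len(sections)
    for vendor, sections in section_scores.items()
}
shortlist = sorted(totals, key=totals.get, reverse=True)[:2]

# Step 2: for each section, see which shortlisted vendor leads.
sections = ["functionality", "support", "price"]
leaders = {
    section: max(shortlist, key=lambda vendor: section_scores[vendor][section])
    for section in sections
}

print("Shortlist:", shortlist)
print("Section leaders:", leaders)
```

In this made-up data, Vendor B has the higher total, yet Vendor A leads on functionality and support. That is the kind of pattern worth probing in follow-ups and demos.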

It’s okay if the top score is not the final choice

Sometimes, the highest-scoring vendor stretches the budget beyond reason or carries risks that your team cannot accept. Choosing a near-tie with a better price or a smoother implementation plan can be the right call. Either way, document the reasoning so future audits and stakeholders can see the trade-offs you made.

Normalize, reconcile, and communicate

Check for scorers who rate consistently high or low and normalize if needed. When there is a big gap on a critical question, bring the scorers together to discuss. Then, capture the decision logic in a summary for executives, including a chart or table that shows totals, section scores, and key comments.
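The text above does not prescribe a normalization method. One common option, shown here purely as an illustration, is to convert each evaluator's scores to z-scores (distance from that evaluator's own average, measured in standard deviations) so that a lenient scorer and a strict scorer become comparable. The evaluator names and scores are hypothetical.

```python
from statistics import mean, pstdev

# Hypothetical raw scores: evaluator -> vendor -> score on the 1-to-5 scale.
raw = {
    "Ana": {"Vendor A": 5, "Vendor B": 4, "Vendor C": 5},  # tends to score high
    "Ben": {"Vendor A": 3, "Vendor B": 1, "Vendor C": 2},  # tends to score low
}


def normalize(evaluator_scores):
    """Return z-scores for one evaluator; all zeros if they gave identical scores."""
    values = list(evaluator_scores.values())
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return {vendor: 0.0 for vendor in evaluator_scores}
    return {vendor: (score - mu) / sigma for vendor, score in evaluator_scores.items()}


for evaluator, evaluator_scores in raw.items():
    rounded = {vendor: round(z, 2) for vendor, z in normalize(evaluator_scores).items()}
    print(evaluator, rounded)
```

After normalization, both evaluators show Vendor B as their lowest-rated option even though their raw numbers look very different. Z-scores are harder to explain to executives than raw scores, so it may be worth presenting both.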

RFP weighted scoring, step by step

We’ve already covered the basics of weighted scoring, so let’s look at how to implement it in practice and what the results can look like.

A realistic weighted-scoring scenario

Suppose you are evaluating a platform purchase and agree that functionality and experience matter most, but cost and innovation also play a role. Your section weights might look like this:

- Approach: 10 percent

- Experience: 25 percent

- Functionality: 30 percent

- Innovation: 10 percent

- Cost: 25 percent

Within each section, you still score on your 1 to 5 scale. Each section’s average score is then multiplied by its weight, so every section contributes to the final result in proportion to its importance.
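Here is how that calculation might look for a single vendor, using the section weights listed above. The individual question scores are hypothetical, and the variable names are just for illustration.

```python
# Section weights in percent, taken from the scenario above.
weights = {
    "Approach": 10,
    "Experience": 25,
    "Functionality": 30,
    "Innovation": 10,
    "Cost": 25,
}

# Hypothetical question-level scores (1 to 5) for one vendor.
question_scores = {
    "Approach": [4, 4],
    "Experience": [3, 4],
    "Functionality": [5, 4, 5, 4],
    "Innovation": [3],
    "Cost": [2, 3],
}

weighted_total = 0.0
for section, weight in weights.items():
    section_average = sum(question_scores[section]) / len(question_scores[section])
    contribution = section_average * weight / 100  # this section's share of the total
    weighted_total += contribution
    print(f"{section}: average {section_average:.2f}, contributes {contribution:.3f}")

print(f"Weighted total: {weighted_total:.2f} out of 5")
```

Because the weights sum to 100, the weighted total stays on the same 1 to 5 scale as the individual scores, which makes it easy to explain to stakeholders.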

How to set up a weighted scoring model

Step 1: Establish your criteria. Meet with stakeholders, research the market, and list your ideal solution characteristics. Group the list by topic, then map that list to your RFP sections.

Step 2: Prioritize what is essential. Label each requirement as must-have, nice-to-have, informational, or out of scope. Sections with more must-haves should get more weight. By the end, your weights should add up to 100 percent.

Step 3: Pick your process:

- Manual spreadsheets are fine for a few simple events. Be mindful of formula risk and version control.

- RFP software centralizes scoring, automates weights, and eases collaboration. It requires an investment, yet often pays off quickly in terms of speed and consistency.

Step 4: Carefully craft your questions. Use closed-ended questions whenever possible to ensure scoring is objective. For open-ended prompts, define example answers that map to low, medium, and high scores.

Step 5: Calculate and compare. Weighted scores make side-by-side comparisons simpler and more defensible. Once you have totals, sanity-check section-level patterns and reconcile any anomalies among scorers.
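Step 2 says that sections with more must-haves should get more weight, but it leaves the exact method to you. One possible heuristic, sketched below, gives each priority label a point value and sets weights in proportion to each section's points. The point values (3 for must-have, 1 for nice-to-have, 0 otherwise), the section names, and the `suggest_weights` helper are all assumptions. Treat the output as a starting point for stakeholder discussion, not a final answer.

```python
# Assumed point values per priority label; adjust to taste.
PRIORITY_POINTS = {
    "must-have": 3,
    "nice-to-have": 1,
    "informational": 0,
    "out of scope": 0,
}


def suggest_weights(requirements_by_section):
    """Return integer percentage weights, proportional to priority points, summing to 100."""
    points = {
        section: sum(PRIORITY_POINTS[label] for label in labels)
        for section, labels in requirements_by_section.items()
    }
    total = sum(points.values())
    if total == 0:
        raise ValueError("No scored requirements, cannot derive weights")
    exact = {section: 100 * p / total for section, p in points.items()}
    weights = {section: int(value) for section, value in exact.items()}  # round down
    # Give leftover points to the sections with the largest fractional parts.
    leftover = 100 - sum(weights.values())
    by_remainder = sorted(exact, key=lambda s: exact[s] - weights[s], reverse=True)
    for section in by_remainder[:leftover]:
        weights[section] += 1
    return weights


# Hypothetical requirement labels per section.
requirements = {
    "Functionality": ["must-have"] * 4 + ["nice-to-have"] * 2,
    "Security": ["must-have"] * 2 + ["nice-to-have"],
    "Cost": ["must-have", "informational"],
}
print(suggest_weights(requirements))
```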

Weighted scoring best practices

- Refer back to your requirements document as you score to ensure you do not drift away from the goal.

- Share criteria and evaluation plans with vendors to improve response quality.

- Set a Q&A window so suppliers can clarify the scope before they write.

- Hide vendor names during scoring to reduce bias.

- Look for significant gaps between scorers and resolve them with a short review call.

Weighted scoring examples and templates

Once you see weighted scoring in a matrix, the method should click. The two widely used templates below show how weights affect the outcome without complicating the process. Use them as reference models, or adapt them directly for your next RFP:

- A weighted decision matrix: Expert Program Management (EPM) created this matrix to evaluate providers based on cost, service level, ease of termination, and more. The example shows that a higher-priced vendor can still win when they outperform on factors that matter most.

- A weighted criteria matrix: This weighted criteria matrix from GoLeanSixSigma includes a fillable template and a completed example that you can adapt for software or service selections.

Want a broader library to bookmark for later? Keep a folder of weighted templates that work well for your industry. It saves time and builds consistency across buying teams.

Simple scoring versus weighted scoring

Both methods have their uses. Let’s take a side-by-side look so you can choose the one that best suits the decision at hand. If the stakes are low and the priorities are flat, keep it simple. If trade-offs matter, go weighted.

Where simple scoring shines:

- Smaller, low-risk decisions

- Short RFPs with tightly scoped requirements

- Teams that are new to formal scoring

Where weighted scoring shines:

- Complex, high-impact purchases

- Decisions that involve trade-offs

- Auditable processes with many stakeholders

How they differ in practice

- Precision: Simple scoring treats all questions equally. Weighted scoring concentrates value where it matters most.

- Setup effort: Simple scoring is faster to set up. Weighted scoring takes more thought up front, but it saves time during comparison.

- Transparency: Both can be transparent. Weighted scoring often feels fairer to stakeholders because it makes priorities explicit.

- Technology fit: Either works in spreadsheets. Weighted scoring benefits most from software because automation reduces errors and speeds up collaboration.

Bringing scoring to life in your organization

Good ideas stall without adoption. Let’s see how you can turn scoring from a one-off exercise into a shared habit through a checklist you can run every time, a few common pitfalls to avoid, and a simple way to present results that busy leaders can approve quickly.

Your quick-start scoring checklist:

- Confirm goals, risks, and success measures with sponsors.

- Agree on evaluation categories and the scale you will use.

- Choose simple or weighted scoring based on priority spread.

- Draft a scoring rubric with example answers for key questions.

- Assign sections to the right evaluators and set clear due dates.

- Decide on blind scoring and publish your approach in the RFP.

- Collect, normalize, and review results together.

- Document final decisions, trade-offs, and next steps.

Common pitfalls to avoid:

- Overlong questionnaires. Your scorers have day jobs. Keep the number of questions reasonable, and ask only what you will use.

- Vague rubrics. If evaluators cannot distinguish between a 3 and a 5, your scores will be noisy. Provide clear examples that illustrate the differences.

- Hidden priorities. If cost is weighted at 10 percent and security at 30 percent, say so. Vendors will write better answers, and your scoring will line up with your strategy.

- Spreadsheet traps. Complex formulas break, and version control gets messy. If your team runs frequent or complex RFPs, consider software like the Responsive platform.

How to present results so leaders can decide

Summarize your results in a single page:

- A ranked list of vendors with total scores

- A section-by-section bar chart for the top three

- A short paragraph on the trade-offs and your recommendation

- A note on budget alignment and implementation readiness

If you recommend the second-highest score for good reasons, call it out and explain the logic. Executives want a decision they can defend, not just a spreadsheet they can file.

Final thoughts

Scoring is where your RFP’s hard work pays off. Simple scoring gives you an efficient way to compare apples to apples. Weighted scoring helps you focus on what matters most. Both can be fair, transparent, and fast when you set expectations early, build a clear rubric, and guide evaluators through the process.

If your team wants to go further, consider how an SRM platform supports the whole journey. Centralized collaboration, reusable content, and built-in scoring tools turn scoring from a spreadsheet exercise into a shared, repeatable practice. With consistent, practical use of these platforms, better decisions become a habit.