Adopting AI can be a turning point for bid and proposal teams, but only if it’s approached with clarity and care. For teams managing high volumes and even higher expectations, integrating AI feels like both an opportunity and a disruption. It changes how teams work, collaborate, and deliver value, sometimes faster than they’re ready for.

That’s the reality many revenue teams are navigating today. AI has immense potential to help teams write proposals faster and better, sharpen strategic messaging, increase win rates, demonstrate their value to executives, and generate revenue. But that potential only materializes when teams adopt AI with intention and stick with it. One-off enthusiasm and top-down mandates aren’t enough. Sustained adoption requires confidence, clarity, and a culture that encourages experimentation.

The Responsive Podcast recently featured two leaders driving AI adoption in high-stakes environments: Alexis Hourselt, Compliance and Operations Leader at Microsoft, and Darrell Woodward, Director at Prosfora Solutions, a proposal automation consultancy. Their approaches are different, but the goal is shared: make AI stick in a way that helps people do better work.

What emerged from these conversations was a clear takeaway: successful AI adoption is all about creating safe, structured, even playful experiences that make AI feel useful, not threatening. When done right, AI transforms how teams work and how they think about their value.

Successful AI adoption starts with curiosity, not compliance

According to Responsive’s 2025 State of Strategic Response Management (SRM) Report, organizations are steadily moving from AI curiosity to AI commitment. This year’s data shows that 62% of organizations are either piloting or have fully implemented AI solutions to support revenue-generating activities. Another 28% are considering adoption.

Regardless of where organizations are currently on the AI adoption road, both the direction and destination are clear. All roads lead to AI.

When organizations first introduced AI, they often did so through documentation or an all-hands announcement. The implication was usually: This is the future. Start using it. More recently, organizations have been exploring new ways to introduce AI that suit their specific needs and align with the expectations of both the organization and its employees.

At Microsoft, Alexis and the Proposal Center of Excellence, which manages a content library for more than 21,000 users, took a different approach.

“We ran an internal challenge,” Alexis said. “We invited teams to experiment with Copilot and submit real use cases — what problems did it solve, how did it help, what did you learn?”

The Copilot Challenge was essentially an experiment that framed AI adoption and learning as a game. The response was overwhelmingly positive, and the results surprised even Alexis and her team.

“We got 13 unique [use cases] across the business,” she said. “And people had fun doing it.”

Fun is not a strategic metric. However, in this case, it led to an unexpected but welcome behavioral change. People were willing to try new things, make mistakes, and share what worked for them. “It wasn’t about being perfect,” Alexis said. “It was about exploration.”

Gamification, done well, lowers the stakes. It makes trying AI feel less like a test and more like a puzzle. That approachable and non-threatening framing matters, especially for technical, legal, or compliance-heavy teams who aren’t used to working out loud or taking creative risks.

As Alexis put it, “People will play with AI when the pressure is low. But they’ll keep using it when they see it’s actually making work easier.”

Key insights and takeaways:

- Start with exploration: Microsoft invited teams to experiment with AI and share real-world use cases. This exploration fostered organic learning and a sense of curiosity.

- Gamify the experience to encourage engagement: Framing the effort as a “Copilot Challenge” lowered the stakes and made learning about AI approachable.

- Prove AI’s value through impact: Perfection shouldn’t be the goal with AI. As AI’s impact became more apparent, people’s initial curiosity naturally turned into continued use.

Make AI’s usefulness immediate and visible

Darrell Woodward comes from a different world. He spends his days in the trenches of proposal management consulting, but he sees the same dynamic: AI needs to prove its value early.

“I think the biggest, most obvious opportunity is automating the parts of the process that people don’t enjoy,” he said. “Chasing SMEs, formatting documents, reviewing for consistency — all the tedious stuff that adds friction but not value.”

When it comes to AI adoption, early wins matter. They create credibility. When people see AI saving time or improving quality, they start to trust it, and trust unlocks usage.

“You have to show it’s not just about doing more work faster,” Darrell said. “It’s about doing better work — with less stress and more focus.”

That shift is crucial. Burnout is common on bid and proposal teams. Long hours, shifting deadlines, and unclear inputs all add up. Darrell believes AI can reset that culture.

“The old hero mindset — where you’re up all night finishing a proposal — it’s fading,” he said. “Leaders are finally thinking about wellbeing.”

If AI helps people reclaim time, reduce stress, and improve results, that’s not hype. That’s transformation, and it can be observed and measured with clear metrics, such as those surfaced by the Executive Dashboard in the Responsive Platform.

Key insights and takeaways:

- Target early wins to solve real problems: Automate bottlenecks like SME coordination, formatting, and consistency reviews. Quick wins help remove friction.

- Build credibility with clear results: Metrics such as time saved and improved proposal quality demonstrate AI’s value, and Responsive’s Executive Dashboard makes them visible.

- Reframe AI as a culture shift: Move away from “hero culture” (late nights, reactive firefighting) to support employee well-being, made easier with AI assistance.

Make AI approachable, not intimidating

Training alone isn’t enough to make AI stick. People also need to feel comfortable using it. One reason that’s possible? AI doesn’t take things personally. It has no ego.

“It doesn’t get offended,” Darrell said. “You can ask AI the same question 10 different ways. You can say, ‘This is garbage, try again.’ And it’s fine.”

That creates a different kind of workspace. Junior team members can brainstorm freely, and experts can test ideas without judgment. “It’s a sounding board,” he said. “And that’s really powerful.”

Alexis echoed this sentiment. “AI isn’t replacing people — it’s extending them. But people need to feel safe being wrong with it. Otherwise, they’ll just avoid it.”

To make that kind of open engagement possible, teams need thoughtful guardrails, like dedicated sandbox environments, peer demos, or visible support from leaders who are willing to experiment out loud. When people see AI being used openly and without pressure, they’re more likely to engage on their own terms — and to view it positively.

According to our own research, the organizations seeing the greatest year-over-year revenue growth suggest that pairing AI with people drives better results and keeps teams happier. Among these high-growth organizations, 52% have either fully deployed or trialed AI solutions alongside their bid and proposal teams.

Whatever the right approach for a given team or organization, the goal is to make people comfortable using AI from the outset, so they feel at ease rather than threatened. People should feel excited to learn and grow alongside a tool that will help them succeed in their careers. If you want AI to stick, make sure it feels like a tool, not a trap.

Key insights and takeaways:

- Use AI’s ego-free nature to encourage creativity: Promote AI as a risk-free sounding board, especially helpful for junior team members or cautious contributors.

- Model experimentation from the top: Leaders should actively engage with AI in visible ways. When leadership sets the example, others are encouraged to follow.

- Frame AI as an extender, not a replacement: Reinforce that AI supports and amplifies human work. People are more likely to adopt new tech when they feel safe using it.

Curation is what makes AI trustworthy

While excitement and ease matter, Darrell and Alexis both pointed to something more foundational: content governance.

“The usage spike came after we connected AI to our central content library,” Alexis said. “Before that, people didn’t trust what they were getting back.”

For both, content quality was a recurring theme. It’s not just what you ask AI; it’s what you give it to work with. Through extensive conversations with our customers, we have found that a well-maintained, accurate content library consistently yields more accurate AI-generated responses. That’s because the AI draws only from the content in your approved library, so the more specific, trustworthy, and up-to-date that content is, the better the answers.

Darrell was much more blunt: “If your content is a mess, AI will just make that mess louder.”

That’s why he prioritizes curation and why he encourages everyone else to do the same. “You don’t need to give AI everything — you need to give it the right things. Clean, approved, reusable content. That’s what makes AI answers accurate and usable.”

Alexis agreed. “We saw real adoption once people knew the AI was pulling from the same source they already trusted.”

This is especially critical in compliance-driven industries, where accuracy isn’t optional. But it’s just as true for sales, proposals, or product marketing, where voice, tone, and differentiation can decide whether a deal is won or lost.

Key insights and takeaways:

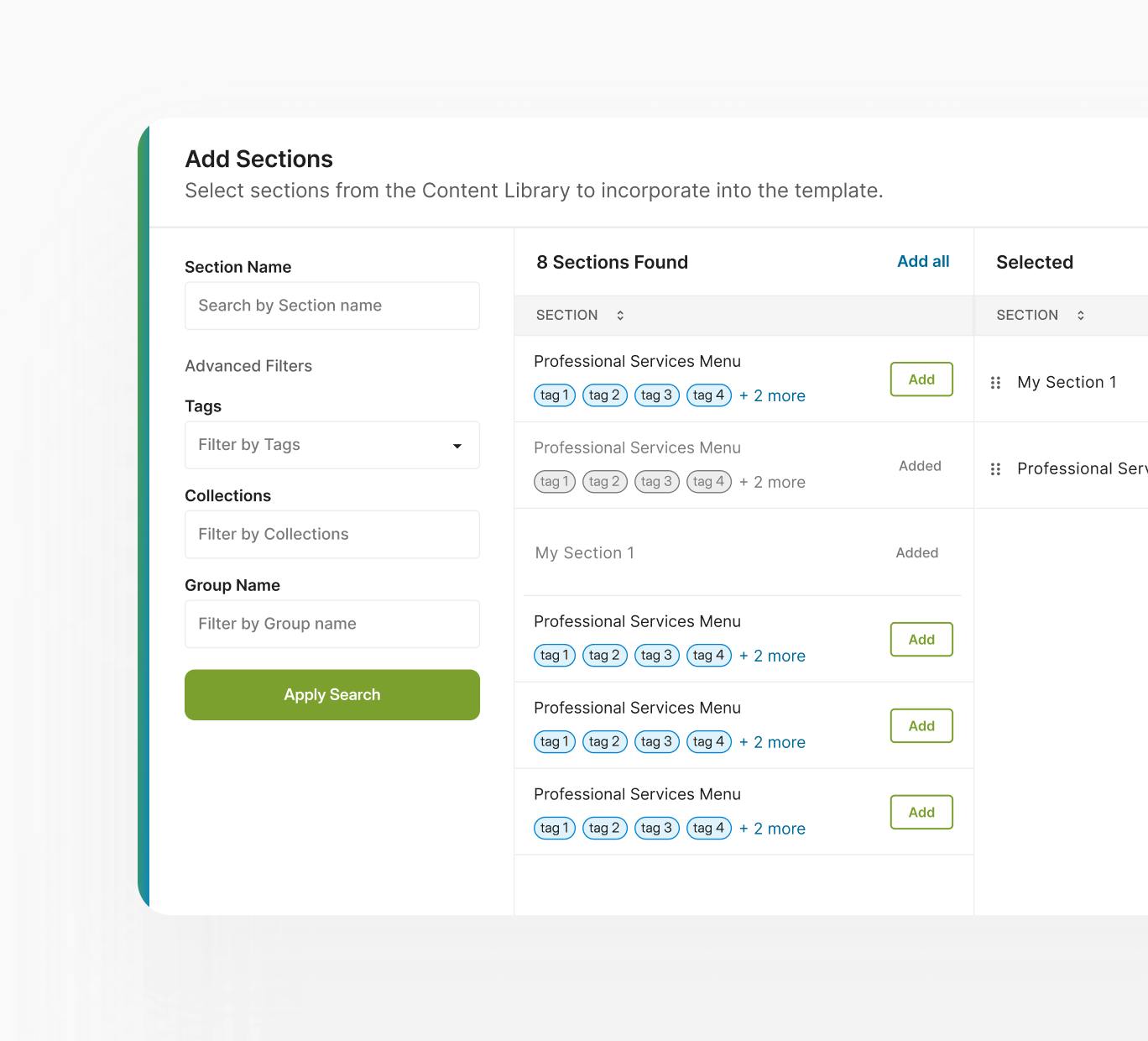

- Connect AI to a trusted content library: People only trusted the AI once it pulled answers from a vetted, central source they already relied on.

- Curation beats volume in AI readiness: You don’t need to give AI everything. Instead, focus on giving AI the right, clean, approved, and reusable content.

- Content quality directly shapes AI output: Poorly maintained content leads to inaccurate, low-value responses. This is true regardless of how sophisticated the AI is.

Treat AI adoption as an experience, not an endpoint

If there’s one thing Alexis and Darrell both emphasized, it’s that AI adoption isn’t about hitting a milestone. It’s about building momentum that teams and organizations can capitalize on and keep going for years.

And the fast returns from AI can make it easier to get buy-in from both teams and leadership. According to the 2025 State of SRM Report, two-thirds of organizations report achieving positive ROI from AI adoption within 12 months.

However, even with that fast ROI, long-term AI adoption requires effort. “You don’t launch AI and walk away,” Alexis said. “You support it. You spotlight success. You keep making it easier to use.”

Darrell takes a similar view. “You have to show people where it helps, invite them to try, and then keep the feedback loop going.”

In other words, AI adoption is a cultural shift. Culture doesn’t change through mandates. It changes through moments when people feel seen, supported, and excited to do something differently. And the most significant catalyst for that kind of change right now is AI.

That’s what Alexis did with Microsoft’s Copilot Challenge. That’s what Darrell does with his SME support strategies. And it’s what Responsive empowers with purpose-built AI workflows that respect context, compliance, and creativity.

Key insights and takeaways:

- Highlight wins to build long-term momentum: Showcase success stories to reinforce value and encourage broader team and cross-team engagement.

- Create an open feedback loop: Encouraging feedback helps refine use cases and builds a sense of ownership across teams.

- Design programs that blend support with curiosity: Initiatives like internal challenges or SME-driven pilots make experimentation fun and normalize learning.

Allow AI to make work better, not just faster

As more organizations adopt Strategic Response Management (SRM) platforms powered by AI, the goal shouldn’t be efficiency alone. It should be empowerment.

“AI should make your job more enjoyable,” Darrell said. “That’s what makes it stick.”

Alexis added: “It’s not about being futuristic. It’s about being useful — right now.”

For proposal teams, sales reps, compliance leaders, and SMEs, that usefulness manifests in different ways. However, the underlying principle remains the same: if you want AI to work, it must work for people. Make it safe. Make it fun. Make it strategic. In return, you’ll turn AI from a question mark into a competitive advantage.