Organizations across industries receive an ever-growing volume of requests for proposals (RFPs), security questionnaires, due diligence questionnaires (DDQs), and other complex information requests. These documents often contain hundreds of questions that require accurate, compliant, and timely responses. Traditional manual processes involve searching through past proposals, coordinating with subject matter experts across departments, and spending hours copying and pasting content from various sources.

AI-powered response management software transforms this labor-intensive process by automating content retrieval, generating draft responses, and streamlining collaboration workflows.

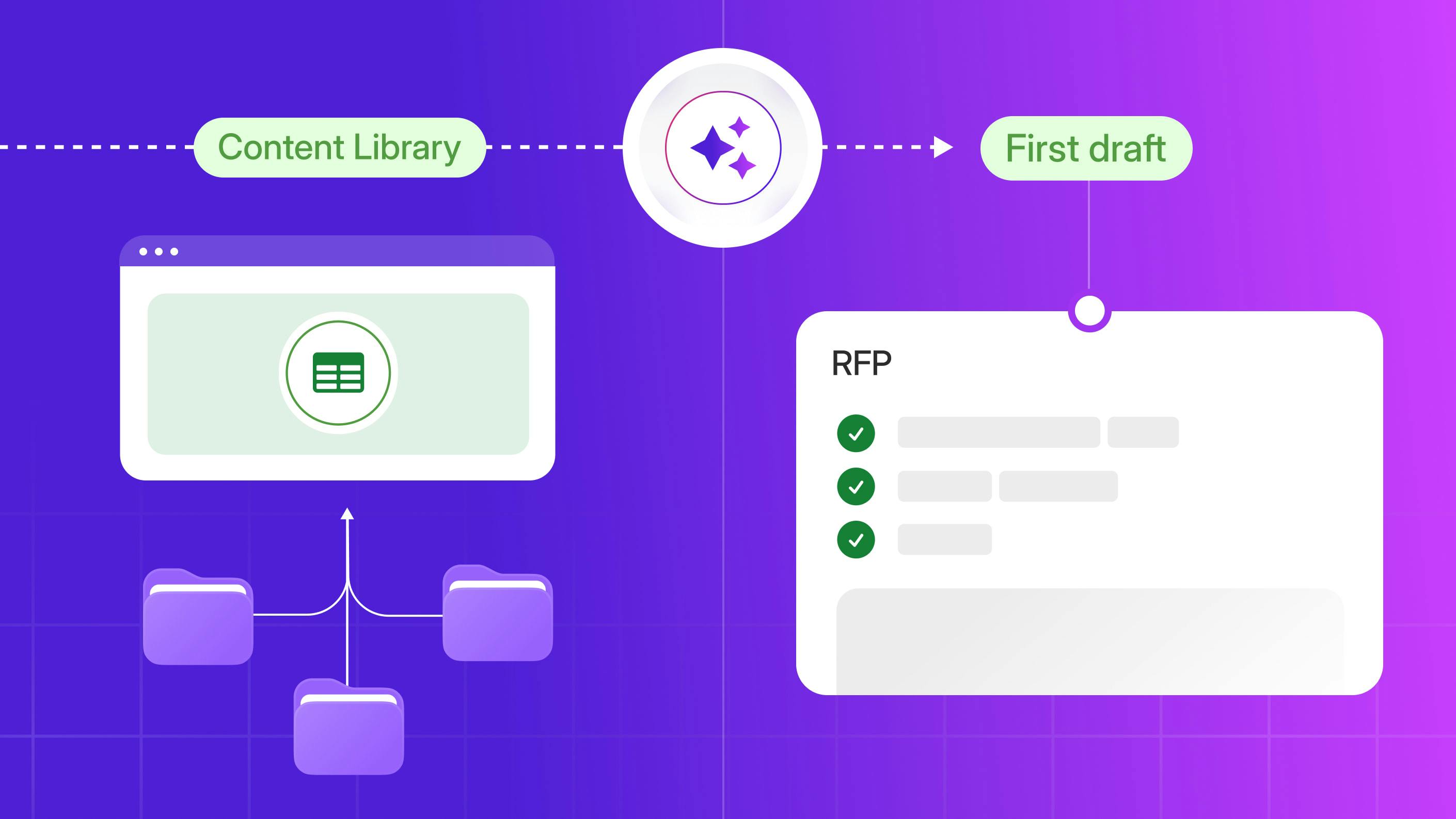

Automated content generation

AI systems can scan an organization's existing knowledge base (often called a content library) to generate draft responses to RFP questions through a multi-step process. First, the technology parses incoming questions to understand their intent and key topics. Next, the system searches the organization's content repository using both keyword matching and semantic understanding to identify relevant previous answers. Finally, it combines information from multiple sources, adapts the tone and length based on user-specified prompts and instructions, and produces a draft response for review.
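The three steps can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's implementation: the names `LibraryEntry`, `parse_question`, `retrieve`, and `draft_response` are hypothetical, the stopword list is arbitrary, and plain term overlap stands in for the combined keyword and semantic search a real platform would use.

```python
import re
from dataclasses import dataclass

# Small, arbitrary stopword list (an assumption for this sketch).
STOPWORDS = {"a", "an", "the", "do", "does", "you", "your", "how", "is",
             "are", "of", "to", "and", "for", "in", "what", "we", "our"}

@dataclass
class LibraryEntry:
    question: str
    answer: str

def parse_question(text: str) -> set[str]:
    """Step 1: reduce a question to its key terms."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower())
            if w not in STOPWORDS}

def retrieve(question: str, library: list[LibraryEntry],
             top_k: int = 2) -> list[LibraryEntry]:
    """Step 2: rank library entries by Jaccard overlap of key terms."""
    terms = parse_question(question)
    scored = []
    for entry in library:
        entry_terms = parse_question(entry.question + " " + entry.answer)
        union = terms | entry_terms
        score = len(terms & entry_terms) / len(union) if union else 0.0
        if score > 0:
            scored.append((score, entry))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [entry for _, entry in scored[:top_k]]

def draft_response(question: str, library: list[LibraryEntry]) -> str:
    """Step 3: merge the retrieved answers into a draft flagged for review."""
    sources = retrieve(question, library)
    if not sources:
        return "[NO MATCH: route to a subject matter expert]"
    return "[DRAFT: needs human review] " + " ".join(e.answer for e in sources)

# Made-up example library.
library = [
    LibraryEntry("Do you encrypt data at rest?",
                 "All customer data is encrypted at rest with AES-256."),
    LibraryEntry("What is your uptime SLA?",
                 "We commit to 99.9% uptime."),
]
draft = draft_response("How is data encrypted at rest?", library)
```

The draft is deliberately labeled as needing review, which mirrors the final step of the process: the system proposes, and a person approves.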

The effectiveness of this approach varies with the type of request. Companies using these systems report that AI can pre-populate question-and-answer content for certain types of requests, particularly security questionnaires and compliance documents, where questions are standardized and repetitive. For more complex, strategic questions that require customization or recent information, AI-generated content works best when paired with a human-in-the-loop review process and carefully tuned prompts.

AI content generation works by analyzing patterns across thousands of previously answered questions. The best of these systems draw only on a moderated, up-to-date, and accurate knowledge base, using no outside information from the wider Internet, so that responses are based solely on your organization's knowledge.

When a new RFP arrives, these AI generation systems identify questions similar to those answered before and retrieve the most relevant responses. Some platforms, such as the Responsive platform, can also pull from technical documentation, product specifications, and other approved company materials to construct comprehensive answers. During human review, users can apply writing style prompts and instructions to make the content more concise, technical, or accessible for the target audience they specify.

Speed is one significant advantage. Tasks that previously took five to ten minutes per question (searching the content library, finding relevant passages, and merging information from multiple sources) can now be completed in a fraction of the time using AI assistance. Proposal teams can redirect the time saved toward higher-value activities: strategy development, personalization, and quality assurance.

Content library management

AI tools help organizations maintain centralized repositories of approved responses, technical documentation, and company information. These systems transform unstructured content scattered across various file systems, email threads, and individual computers into a searchable, organized knowledge base that serves as the foundation for AI-generated responses.

This technology makes content searchable in multiple ways. Basic keyword search allows users to find answers containing specific terms. More advanced semantic search understands the meaning behind queries, so a search for "data protection protocols" might surface content about encryption, access controls, and security certifications even if those exact words weren't in the query. Some systems also support natural language questions, allowing users to type "How do we handle customer data?" and receive relevant content.
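The difference between the two search modes can be shown with a toy example. Production semantic search relies on a trained embedding model; in this sketch the vectors in `TOY_EMBEDDINGS` are hand-picked and purely hypothetical, as are the three concept axes and the function names. Only the cosine-similarity ranking reflects how such systems typically compare a query to stored content.

```python
import math
import re

# Hypothetical hand-picked vectors over three made-up concept axes:
# (security, pricing, support). A real system would use a trained model.
TOY_EMBEDDINGS = {
    "protection": (1.0, 0.0, 0.0),
    "encryption": (0.9, 0.0, 0.1),
    "access": (0.8, 0.0, 0.2),
    "controls": (0.8, 0.0, 0.1),
    "pricing": (0.0, 1.0, 0.0),
    "discount": (0.0, 0.9, 0.1),
    "support": (0.0, 0.1, 0.9),
    "hours": (0.0, 0.0, 1.0),
}

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def keyword_search(query: str, docs: list[str]) -> list[str]:
    """Return documents that contain every term in the query."""
    terms = set(tokenize(query))
    return [d for d in docs if terms <= set(tokenize(d))]

def embed(text: str) -> tuple[float, ...]:
    """Average the vectors of known words; unknown words are ignored."""
    vectors = [TOY_EMBEDDINGS[w] for w in tokenize(text) if w in TOY_EMBEDDINGS]
    if not vectors:
        return (0.0, 0.0, 0.0)
    return tuple(sum(axis) / len(vectors) for axis in zip(*vectors))

def cosine(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    norm = math.hypot(*a) * math.hypot(*b)
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0

def semantic_search(query: str, docs: list[str]) -> list[str]:
    """Rank all documents by similarity to the query, best first."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)

DOCS = [
    "We use encryption and strict access controls.",
    "Volume discount pricing is available.",
    "Support hours are 9 to 5.",
]
keyword_hits = keyword_search("data protection protocols", DOCS)
semantic_hits = semantic_search("data protection protocols", DOCS)
```

Here the keyword search finds nothing because no document contains the query terms, while the similarity ranking places the encryption and access control answer first.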

Building an effective content library requires an upfront investment. Organizations need to digitize existing proposal content, establish consistent tagging and categorization systems, and define clear ownership and review processes. Large enterprises often maintain repositories with 15,000 to 25,000 documents or Q&A pairs.

Microsoft's proposal team, for example, built a repository of approximately 20,000 documents over five years, which Microsoft uses as the source material for AI-generated responses. Microsoft estimates this repository saves the company $6 million annually in employee productivity.

AI continuously monitors content quality and freshness. It can identify duplicate or outdated answers, flag content that hasn't been reviewed recently, and suggest which Q&A pairs should be updated based on changes in products, services, or regulations. Some platforms provide dashboards showing content health metrics (tracking which answers are used most frequently, which need updating, and where gaps exist in the knowledge base). Teams use these insights to prioritize content maintenance efforts and ensure accurate, current information feeds the AI.
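Two of these checks, stale content and near-duplicate answers, are simple enough to sketch with the Python standard library. The `QAPair` structure, the 365-day review window, and the 0.85 similarity threshold are assumptions chosen for illustration; a real platform would likely use richer signals than character-level similarity.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from difflib import SequenceMatcher
from itertools import combinations

@dataclass
class QAPair:
    id: str
    answer: str
    last_reviewed: date

def find_stale(pairs: list[QAPair], today: date,
               max_age_days: int = 365) -> list[str]:
    """Flag answers whose last review is older than the allowed window."""
    cutoff = today - timedelta(days=max_age_days)
    return [p.id for p in pairs if p.last_reviewed < cutoff]

def find_near_duplicates(pairs: list[QAPair],
                         threshold: float = 0.85) -> list[tuple[str, str]]:
    """Flag pairs of answers whose text is almost identical."""
    dupes = []
    for a, b in combinations(pairs, 2):
        ratio = SequenceMatcher(None, a.answer.lower(), b.answer.lower()).ratio()
        if ratio >= threshold:
            dupes.append((a.id, b.id))
    return dupes

# Made-up library entries.
pairs = [
    QAPair("qa-1", "We encrypt all customer data at rest using AES-256.",
           date(2023, 6, 1)),
    QAPair("qa-2", "We encrypt all customer data at rest with AES-256.",
           date(2024, 11, 1)),
    QAPair("qa-3", "Our support desk is staffed 24/7.", date(2024, 12, 1)),
]
stale_ids = find_stale(pairs, today=date(2025, 1, 1))
duplicate_ids = find_near_duplicates(pairs)
```

The pairwise comparison is quadratic in the number of answers, so for a library with tens of thousands of entries it would need to be replaced with an indexed or hashed approach.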

Response personalization

AI systems can adjust the tone, style, and level of detail in responses based on specified user prompts, elevating AI-generated responses beyond simple template-based approaches. Users provide instructions about multiple dimensions of the desired output: writing style (formal, conversational, technical), target audience (C-suite executives, IT professionals, procurement teams), level of technical detail, desired length constraints, and specific messaging themes to emphasize.

Processing these parameters along with the source content, the AI generates responses tailored to the specific context. For instance, the same underlying product information might be presented as a high-level business value summary for executives or as detailed technical specifications for IT evaluators. Win themes (strategic messages about differentiation, competitive advantages, or alignment with the buyer's stated priorities) can be incorporated throughout the response.
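One plausible way to picture this is as prompt assembly: the user's settings are turned into instructions that accompany the approved source content. The sketch below is hypothetical throughout; `PersonalizationSettings`, `build_prompt`, and the prompt wording are invented for illustration and do not describe any specific product.

```python
from dataclasses import dataclass, field

@dataclass
class PersonalizationSettings:
    style: str = "formal"
    audience: str = "procurement teams"
    max_words: int = 150
    win_themes: list[str] = field(default_factory=list)

def build_prompt(question: str, source_content: str,
                 settings: PersonalizationSettings) -> str:
    """Combine user settings and approved content into one instruction block."""
    lines = [
        "Answer the RFP question using ONLY the approved source content.",
        f"Writing style: {settings.style}",
        f"Target audience: {settings.audience}",
        f"Maximum length: {settings.max_words} words",
    ]
    if settings.win_themes:
        lines.append("Emphasize these themes: " + "; ".join(settings.win_themes))
    lines += ["", f"Question: {question}", f"Source content: {source_content}"]
    return "\n".join(lines)

def within_word_limit(response: str, settings: PersonalizationSettings) -> bool:
    """Check a generated response against the requested length limit."""
    return len(response.split()) <= settings.max_words

# The same source content, framed for two different readers.
source = "The platform supports SAML 2.0 single sign-on and role-based access."
exec_prompt = build_prompt(
    "How do you manage user access?", source,
    PersonalizationSettings(style="conversational", audience="C-suite executives",
                            max_words=60, win_themes=["reduced security risk"]),
)
it_prompt = build_prompt(
    "How do you manage user access?", source,
    PersonalizationSettings(style="technical", audience="IT professionals"),
)
```

Because both prompts share one source passage, the library only has to hold a single authoritative answer.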

This capability addresses a common challenge in RFP management: maintaining content libraries with multiple versions of the same answer for different audiences creates significant overhead and increases the risk of inconsistency. With AI-driven personalization, teams can maintain a single authoritative version of each answer and let the technology adapt it as needed. Some organizations report reducing content library maintenance time by 50% through this approach, as they no longer need to create and update separate versions for different contexts.

Personalization extends to language and compliance requirements. Responses can meet specific formatting requirements, adhere to character or word count limits, and include or exclude certain types of information based on the RFP instructions. For global organizations, the same base content can be adapted for different regulatory environments or cultural contexts while maintaining the core message and accuracy.

Workflow automation

These platforms typically include comprehensive project management features that coordinate the entire pursuit lifecycle. The workflow begins when teams upload RFP documents in various formats (Word, Excel, PDF, or even scanned images). The AI automatically parses these documents to identify and extract individual questions, sub-questions, tables, and requirements, organizing them into a structured format that teams can work with efficiently.

Intelligent question assignment follows. Rather than manually determining which subject matter expert should answer each question, the AI can analyze the content of each question and suggest the best SME and reviewer for each question, based on their expertise areas, past contributions, or departmental responsibilities. For example, security questions go to the InfoSec team, pricing questions to finance, and technical architecture questions to engineering.
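A rule-based version of this routing can be sketched as follows. The team names come from the example above, but the keyword lists, the `suggest_team` function, and the fallback owner are assumptions; real platforms would also weigh past contributions and stated expertise, which this sketch leaves out.

```python
import re

# Hypothetical keyword lists per team.
TEAM_KEYWORDS = {
    "InfoSec": {"security", "encryption", "access", "breach", "soc", "penetration"},
    "Finance": {"pricing", "price", "cost", "discount", "invoice", "payment"},
    "Engineering": {"architecture", "api", "integration", "scalability", "uptime"},
}
FALLBACK_OWNER = "Proposal manager"

def suggest_team(question: str) -> str:
    """Suggest the team whose keywords best match the question."""
    tokens = set(re.findall(r"[a-z0-9]+", question.lower()))
    scores = {team: len(tokens & keywords)
              for team, keywords in TEAM_KEYWORDS.items()}
    best_team = max(scores, key=scores.get)
    # With no keyword hits, leave the decision to a person.
    return best_team if scores[best_team] > 0 else FALLBACK_OWNER

assignments = {
    q: suggest_team(q)
    for q in [
        "Describe your encryption and access control policies.",
        "What is the pricing for 500 seats?",
        "Provide an overview of your API architecture.",
        "Who are your three largest customers?",
    ]
}
```

The output is a suggestion rather than a final assignment, so a proposal manager can still override it.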

Throughout the response process, the platform tracks completion status in real time. Managers can see which questions have been answered, which are in review, which require additional input, and which are approaching deadlines. Automated notifications alert reviewers when responses are ready for their input and remind contributors about upcoming deadlines. Status meetings and follow-up emails, which traditionally consume significant time, become far less necessary with this visibility.

Review and approval cycles also benefit from workflow automation. Organizations can configure multi-stage review processes in which responses move through legal review, technical review, executive approval, or other checkpoints, based on question type, content sensitivity, or deal size. The system enforces these workflows automatically, ensuring compliance with internal processes without requiring manual oversight.

Users of platforms like Responsive report reducing average response time by 40% through these workflow efficiencies, as delays caused by unclear responsibilities, missed handoffs, and communication gaps are eliminated.

Multilingual capabilities

AI-powered RFP systems can generate responses in multiple languages by translating content from the source library, enabling global organizations to respond efficiently to opportunities in different markets. Modern platforms support answer generation in over 20 languages, including major business languages such as Spanish, French, German, Mandarin, Japanese, and Portuguese, as well as regional languages important for specific markets.

The translation process works in several ways depending on the platform's capabilities. Some systems translate existing English content into the target language on demand. More sophisticated approaches maintain parallel content libraries with professionally translated versions of key content, which the AI then adapts and personalizes for specific RFP questions. The most advanced systems can understand questions posed in one language, retrieve relevant content from libraries in multiple languages, and generate responses in the language requested by the customer.

This capability is particularly valuable for global enterprises that respond to RFPs from multinational corporations or government entities requiring responses in local languages. Rather than maintaining separate proposal teams for each language or relying on external translation services that delay response times, organizations can leverage AI to produce initial translations quickly. The technology understands context and industry terminology, producing translations that maintain technical accuracy and appropriate tone.

Integration with business tools

These platforms integrate with common business applications to embed RFP capabilities directly into the tools teams use daily, eliminating the need to switch between systems constantly. The integrations fall into several categories, each serving specific workflow needs.

Productivity suite integrations connect with Microsoft Office applications—Word, Excel, Outlook, PowerPoint, and Teams—and Google Workspace. Users can access the content library and AI-generated responses directly within these applications. For example, a salesperson drafting a proposal in Word can search the knowledge base and insert approved content without leaving the document. Email integrations allow teams to respond to ad hoc customer questions by pulling verified answers directly into their email responses, ensuring consistency and accuracy.

CRM integrations link RFP activities to broader sales processes. When connected to Salesforce, HubSpot, Microsoft Dynamics, or other CRM platforms, the system can automatically create RFP projects from opportunities, track proposal activity against deals, and feed win/loss data back into the CRM for pipeline analysis. This bidirectional flow ensures that proposal teams understand the sales context of each RFP and that sales teams maintain visibility into proposal progress.

Cloud storage integrations enable teams to pull source material from repositories like SharePoint, Google Drive, Dropbox, and Box. Rather than duplicating content, the AI can reference documents stored in these systems, ensuring responses always reflect the most current information. Some platforms offer API access for custom integrations, allowing organizations to connect internal systems, databases, or other proprietary tools.

Collaboration platform integrations with Slack, Microsoft Teams, and similar tools enable real-time notifications and discussions. Team members receive alerts about new assignments, approaching deadlines, or questions requiring their input directly in their collaboration tools. They can often complete simple tasks—like approving a response or answering a question—without leaving these platforms.

These integrations significantly reduce friction in the response process, as contributors don't need to log into separate systems or constantly check their email for updates. The result is faster response times and higher engagement from subject matter experts who might otherwise deprioritize RFP work.

Document analysis

AI can analyze incoming RFP documents to extract questions, identify requirements, and estimate the effort required to respond—a process often called "shredding" in proposal management. This analysis happens automatically when documents are uploaded, saving teams hours of manual work that would otherwise be spent reading through lengthy documents and creating question lists.

The AI performs multiple types of analysis simultaneously. It identifies and extracts individual questions and sub-questions, even when they're embedded in paragraphs of explanatory text or scattered across complex table structures. It recognizes different question types—yes/no questions, narrative responses, technical specifications, pricing tables, and radio buttons and checkboxes—and formats them appropriately for response. The technology also captures metadata like section numbers, page references, and formatting requirements that teams need to produce compliant submissions.
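A heavily simplified version of this extraction can be written with regular expressions. The sketch assumes each requirement sits on its own numbered line, which real RFPs often violate; the `ExtractedQuestion` type, the verb lists, and the three question kinds are assumptions, and production systems use far more robust parsing.

```python
import re
from dataclasses import dataclass

@dataclass
class ExtractedQuestion:
    section: str  # section number as it appears in the document, e.g. "3.1"
    text: str
    kind: str     # "yes/no", "pricing", or "narrative"

# A line such as "3.1 Do you encrypt data at rest?"
LINE_PATTERN = re.compile(r"^\s*(\d+(?:\.\d+)*)[.)]?\s+(.+?)\s*$")
YES_NO_STARTS = ("do ", "does ", "is ", "are ", "can ", "will ", "has ", "have ")
REQUEST_STARTS = ("describe ", "provide ", "list ", "explain ")

def classify(text: str) -> str:
    lowered = text.lower()
    if lowered.startswith(YES_NO_STARTS):
        return "yes/no"
    if any(word in lowered for word in ("pricing", "price", "cost")):
        return "pricing"
    return "narrative"

def shred(document: str) -> list[ExtractedQuestion]:
    """Pull numbered questions and requests out of raw RFP text."""
    questions = []
    for line in document.splitlines():
        match = LINE_PATTERN.match(line)
        if not match:
            continue
        section, text = match.groups()
        # Keep direct questions and imperative requests; skip headings.
        if text.endswith("?") or text.lower().startswith(REQUEST_STARTS):
            questions.append(ExtractedQuestion(section, text, classify(text)))
    return questions

# Made-up RFP excerpt.
sample_rfp = """3. Security
The vendor must respond by the stated deadline.
3.1 Do you encrypt data at rest?
3.2 Describe your incident response process.
3.3 Provide pricing for 500 users.
"""
extracted = shred(sample_rfp)
```

The section heading and the unnumbered instruction are skipped, while the three numbered items are captured with their section references intact.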

Beyond simple extraction, the AI evaluates document complexity and content. It can identify how many questions require new content versus how many can be answered from existing library materials. It flags unusual requirements, contradictory specifications, or ambiguous language that might require clarification from the buyer. Some systems analyze the strategic fit by comparing RFP requirements against the organization's capabilities, service offerings, and past performance.

This analysis supports go/no-go decision-making, which is critical for organizations that receive more RFP opportunities than they can pursue. The AI can provide preliminary assessments of win probability based on how well the organization's capabilities match the stated requirements, the effort required, and patterns from similar past opportunities. Decision-makers can review this analysis along with the extracted requirements to make informed choices about which opportunities to pursue—often within hours rather than the days traditionally required for thorough RFP review.
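A preliminary assessment of this kind can be pictured as a weighted score over the factors mentioned above. The weights, the 0.6 threshold, and the function names below are illustrative assumptions, and the result is a rough screening signal rather than a calibrated win probability.

```python
def capability_fit(required: set[str], capabilities: set[str]) -> float:
    """Fraction of stated requirements the organization can meet."""
    return len(required & capabilities) / len(required) if required else 0.0

def go_no_go_score(fit: float, library_coverage: float, past_win_rate: float) -> float:
    """Weighted blend of three inputs, each in the range 0 to 1.

    Weights are hypothetical: fit 0.5, library coverage 0.3 (a proxy for
    effort, since covered questions need little new writing), and the win
    rate on similar past opportunities 0.2.
    """
    return 0.5 * fit + 0.3 * library_coverage + 0.2 * past_win_rate

def recommend(score: float, threshold: float = 0.6) -> str:
    return "go" if score >= threshold else "no-go"

# Made-up opportunity: three of four requirements met, 80% of questions
# answerable from the library, 30% win rate on similar past bids.
fit = capability_fit({"sso", "soc2", "api", "on-prem"}, {"sso", "soc2", "api"})
score = go_no_go_score(fit, library_coverage=0.8, past_win_rate=0.3)
decision = recommend(score)
```

In practice the score would sit alongside the extracted requirements so that decision-makers can see which unmet requirement or low-coverage section drove the number.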

For organizations considering these tools, the decision should be based on a realistic assessment of their RFP volume, the repetitiveness of questions they receive, their current content organization, and their capacity to invest in building and maintaining a quality content library. Those with high RFP volumes, standardized questions, and commitment to content governance typically see strong ROI. Those with low volumes, highly customized requirements, or limited resources for content management may find the benefits don't justify the investment.

The key is matching the technology capabilities to the organization's specific needs and constraints rather than adopting AI simply because competitors are doing so.